|

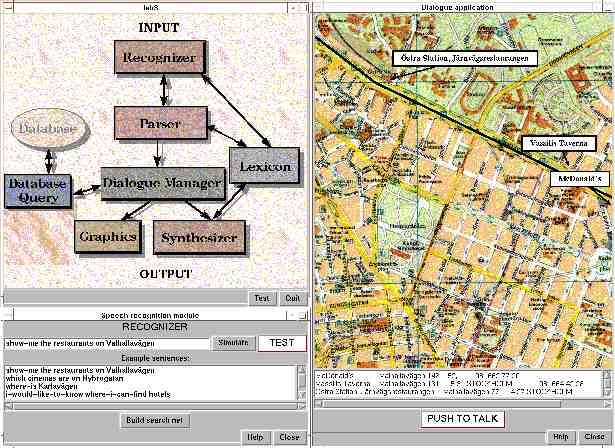

The WAXHOLM human-machine dialogue demonstrator is built on a generic framework

for human/machine spoken dialogue under continuous development at the speech

group at the Department of Speech, Music and Hearing at KTH. The domain

of the WAXHOLM application is boat traffic and tourist information about

hotels, camping grounds, and restaurants in the Stockholm archipelago.

The application database includes timetables for a fleet of some twenty

boats from the Waxholm company, which connects about two hundred ports.

The user input to the system is spoken language exclusively, but the responses

from the system include synthetic speech as well as pictures, maps, charts

and timetables (see Figure 16).

The ASR module of the system, described in detail in Paper 4, has a domain-dependent

vocabulary of about 1000 words. The application has similarities to the

ATIS domain within the ARPA community, the Voyager system from MIT (Glass

et al., 1995) and European systems such as SUNDIAL (Peckham, 1993), Philips’s

train timetable information system (Aust et al., 1994) and the Danish dialogue

project (Dalsgaard and Baekgaard, 1994). Summaries of the WAXHOLM dialogue

system and the WAXHOLM project database can be found in Bertenstam et

al. (1995a,b); an early reference is Blomberg et al. (1993).

The demonstration system is currently mature enough to be displayed and

tested outside the laboratory by completely novice users. One such

attempt was made successfully at "Tekniska Mässan" (the technology fair) in

Älvsjö in October 1996. Visitors with no prior experience with

the system were invited to try the demonstrator in a rather noisy environment.

|

Figure 16. Overview

of the WAXHOLM demonstrator system. See the main text for details.

|